DeepRL-LTL

Deep Reinforcement Learning with LTL goals.

Project maintained by RickyMexx


This repository implements a reinforcement learning task on a custom environment whose goal is given as a temporal logic specification. The project is based on the paper "Modular Deep Reinforcement Learning with Temporal Logic Specifications" (Lim Zun Yuan et al. [1]) and uses a modified Soft Actor-Critic (SAC) algorithm built on the PyTorch implementation provided at PyTorch SAC.


The Environment

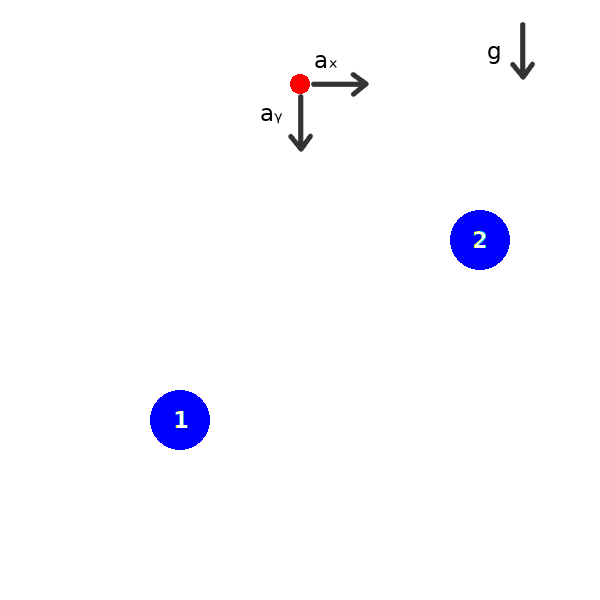

The task is performed on a custom environment built with OpenAI Gym. The agent (the ball) must pass through the two circles in a specified order: first the bottom-left one, then the top-right one. The 6-dimensional state consists of the position and velocity along the x and y axes, plus two binary values (one per circle) indicating whether the agent has already passed through that circle.
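The state layout described above can be sketched as follows. This is a minimal illustration, not the RAEnv code: the circle centres and radius in `CIRCLES` and the helpers `update_flags` and `make_state` are hypothetical. It assumes that "passing through" a circle means the ball's position falls inside it, and that a flag stays at 1 once set.

```python
import numpy as np

# Hypothetical geometry: the real RAEnv circle positions and sizes may differ.
CIRCLES = {
    "bottom_left": (np.array([-0.5, -0.5]), 0.1),
    "top_right": (np.array([0.5, 0.5]), 0.1),
}


def update_flags(pos: np.ndarray, flags: np.ndarray) -> np.ndarray:
    """Set a circle's flag to 1 when the ball is inside it; flags never reset to 0."""
    new_flags = flags.copy()
    for i, (center, radius) in enumerate(CIRCLES.values()):
        if np.linalg.norm(pos - center) <= radius:
            new_flags[i] = 1.0
    return new_flags


def make_state(pos: np.ndarray, vel: np.ndarray, flags: np.ndarray) -> np.ndarray:
    """Concatenate (x, y), (vx, vy) and the two binary flags into a 6-dimensional state."""
    return np.concatenate([pos, vel, flags]).astype(np.float32)
```

For example, a ball at `(-0.45, -0.5)` lies inside the hypothetical bottom-left circle, so its flags become `[1, 0]` and the resulting state is `[-0.45, -0.5, vx, vy, 1, 0]`.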

Results

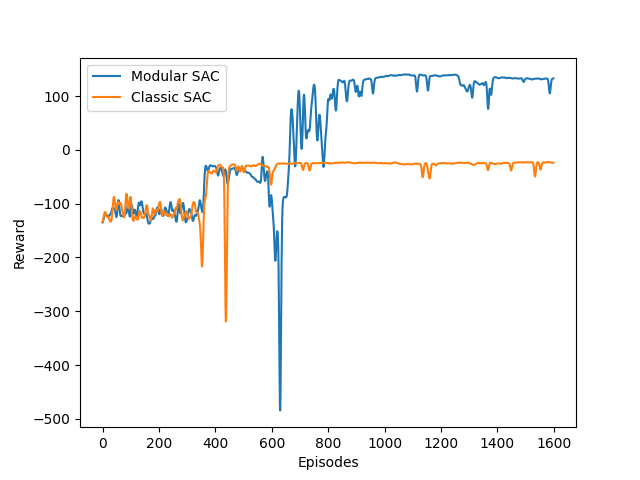

Two agents were trained on the task: one with the unmodified ("classic") SAC algorithm, and a second one trained as described in the paper (Lim Zun Yuan et al. [1]) with a modular design that splits the task into two sub-goals.

In the figure above, SAC alone (left) easily reaches the first goal but fails to reach the second, even though the state tells the agent whether it has already passed through the first circle. The modular agent (right), instead, solves the full task.
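To make the modular idea concrete, here is a minimal sketch assuming the ordered task is written as the LTL formula F(bl ∧ F tr) ("eventually bottom-left, and after that eventually top-right") and compiled by hand into a three-state automaton. The labels `bl`/`tr`, the function `automaton_step` and the class `ModularAgent` are hypothetical names, not code from this repository; in the real agent each module would be a separate SAC actor-critic rather than the plain callables used here.

```python
from typing import Callable, Dict, FrozenSet, Sequence

# Hypothetical labels: "bl" while the ball is inside the bottom-left circle,
# "tr" while it is inside the top-right circle.
ACCEPTING = 2


def automaton_step(q: int, labels: FrozenSet[str]) -> int:
    """Automaton for F(bl & F tr): 0 = nothing visited, 1 = bottom-left visited, 2 = accepting."""
    if q == 0 and "bl" in labels:
        return 1
    if q == 1 and "tr" in labels:
        return 2
    # Visiting top-right first (or any other input) leaves the state unchanged.
    return q


class ModularAgent:
    """Keeps one module per non-accepting automaton state (a stand-in for one SAC actor each)."""

    def __init__(self, modules: Dict[int, Callable[[Sequence[float]], object]]):
        self.modules = modules

    def act(self, state: Sequence[float], q: int) -> object:
        # The automaton state, not the raw observation, selects which module acts.
        return self.modules[q](state)
```

Feeding the label sequence `{}`, `{tr}`, `{bl}`, `{tr}` moves the automaton through states 0, 0, 1, 2: reaching the top-right circle too early does not count. With this design each module only has to learn a single sub-goal, while the automaton handles the ordering.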

Installation

Requirements

Installation of RAEnv

```shell
cd ra-gym
pip install -e .
```

Installation of SAC

```shell
git clone https://github.com/pranz24/pytorch-soft-actor-critic
cp sac_modular.py pytorch-soft-actor-critic
```

Train Classic SAC

```shell
cd pytorch-soft-actor-critic
python sac_modular.py --batch_size 64 --automatic_entropy_tuning True
```

Train Modular SAC

```shell
cd pytorch-soft-actor-critic
python sac_modular.py --batch_size 64 --automatic_entropy_tuning True --modular
```
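The two training commands differ only in the `--modular` switch. Below is a hedged sketch of how such options might be declared with `argparse`; the actual `sac_modular.py` may define them differently, and the defaults and the `str2bool` helper are assumptions. One pitfall worth knowing: `--automatic_entropy_tuning True` passes a value, and declaring it with `type=bool` would turn any non-empty string, including `"False"`, into `True`.

```python
import argparse


def str2bool(value: str) -> bool:
    """Parse 'True'/'False'-style strings explicitly (type=bool would accept 'False' as True)."""
    lowered = value.lower()
    if lowered in ("true", "1", "yes"):
        return True
    if lowered in ("false", "0", "no"):
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the flags used in the commands above."""
    parser = argparse.ArgumentParser(description="Sketch of sac_modular.py options")
    parser.add_argument("--batch_size", type=int, default=256)  # assumed default
    parser.add_argument("--automatic_entropy_tuning", type=str2bool, default=False)
    # Presence of --modular selects the modular agent; absence trains classic SAC.
    parser.add_argument("--modular", action="store_true")
    return parser
```

With this parser, the "classic" command yields `modular=False` and the modular command yields `modular=True`, while both set `batch_size=64`.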

Presentation

Credits

References

- [1] Lim Zun Yuan, Mohammadhosein Hasanbeig, Alessandro Abate, and Daniel Kroening. Modular Deep Reinforcement Learning with Temporal Logic Specifications. Department of Computer Science, University of Oxford.

- [2] Mohammadhosein Hasanbeig, Alessandro Abate, and Daniel Kroening. Certified Reinforcement Learning with Logic Guidance.

- [3] Rodrigo Toro Icarte, Toryn Klassen, Richard Valenzano, and Sheila McIlraith. Using Reward Machines for High-Level Task Specification and Decomposition in Reinforcement Learning. In International Conference on Machine Learning (ICML), 2018, pp. 2107-2116.